Integrating large language models (LLMs) into production systems often reveals a fundamental challenge: their outputs are inherently unstructured and unpredictable.

Whether it's missing fields, malformed formats, or incorrect data types, these inconsistencies hinder reliability and scalability.

The solution? Leverage Pydantic, a Python library that enables runtime data validation using type annotations.

With LLM providers such as Mistral AI (and many others) supporting structured outputs via JSON schemas, combining the two helps ensure that AI-generated data adheres to a strict schema.

In this guide, we’ll walk through a simple but practical real-world example that:

- Uses the Mistral API to generate structured JSON from a CSV input.

- Validates that output using a Pydantic model.

- Implements a retry mechanism for failed validation attempts with an improved prompt.

Full source code available at: https://github.com/nunombispo/PydanticLLMs-Article



Understanding Pydantic

Pydantic is a powerful data validation and parsing library in Python, built around the concept of using standard Python type hints to define data models.

At its core, Pydantic enforces that incoming data matches the specified schema, automatically converting types and raising errors when expectations aren't met.

Widely adopted by web frameworks such as FastAPI, Pydantic is also used in domains where data integrity and clarity are critical, including AI and machine learning workflows.

By turning Python classes into data contracts, Pydantic helps eliminate the guesswork often associated with dynamic or external inputs.

Key Features

- Runtime Type Checking: Pydantic enforces type annotations at runtime, ensuring that all incoming data adheres to the expected types. If a mismatch occurs, it raises detailed validation errors that are easy to debug.

- Automatic Data Parsing and Serialization: Whether you receive input as strings, dictionaries, or nested structures, Pydantic will automatically parse and coerce data into the appropriate Python objects. It can also serialize models back to JSON or dictionaries for API responses or storage.

- Integration with Python Type Hints: Models are defined using familiar Python syntax with type annotations, making it intuitive for developers to describe complex data shapes. This also enables static analysis tools and IDEs to provide better support and autocomplete suggestions.
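The following minimal sketch shows these three features in action. It assumes Pydantic v2, and the User model is a hypothetical example that is not part of this article's project. It covers coercion, serialization, and a structured validation error:

```python
from pydantic import BaseModel, ValidationError


class User(BaseModel):
    # Hypothetical model used only for this illustration
    name: str
    age: int


# Lax (default) mode coerces the numeric string "42" into the integer 42
user = User(name="Ada", age="42")
print(user.age, type(user.age))  # 42 <class 'int'>

# Serialize back to a dict or a compact JSON string
print(user.model_dump())       # {'name': 'Ada', 'age': 42}
print(user.model_dump_json())  # {"name":"Ada","age":42}

# A value that cannot be coerced raises a ValidationError with structured details
try:
    User(name="Bob", age="forty")
except ValidationError as exc:
    for error in exc.errors():
        print(error["loc"], error["type"])  # ('age',) int_parsing
```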

If you are interested in learning more about Pydantic, check out my other articles:

https://developer-service.blog/mastering-pydantic-a-guide-for-python-developers/

https://developer-service.blog/best-practices-for-using-pydantic-in-python/

https://developer-service.blog/beyond-pydantic-7-game-changing-libraries-for-python-data-handling/

The Importance of Structured Outputs in AI

Structured outputs matter for AI responses for three main reasons.

Consistency

Structured outputs provide a consistent format for AI-generated data, which is essential for seamless downstream processing.

When data adheres to a predefined schema, it becomes straightforward to parse, transform, and integrate into various systems such as databases, APIs, or analytic pipelines.

Consistency eliminates guesswork, reduces the need for custom error-prone parsing logic, and enables automation at scale.

Reliability

AI models, and LLMs in particular, can generate diverse and unpredictable outputs.

This variability can lead to failures if systems expect data in a specific format but receive something unexpected instead.

By enforcing structure through validation, the risk of runtime errors, crashes, or corrupted data is significantly reduced.

Reliable data outputs increase confidence in the AI system’s behavior, making it safer to deploy in production environments.
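Here is a minimal illustration of this point, assuming Pydantic v2. The raw strings are hypothetical examples, not real model outputs. Person.model_validate_json reports malformed JSON and schema mismatches through the same ValidationError, so downstream code only ever receives valid objects:

```python
from typing import List, Optional

from pydantic import BaseModel, ValidationError


class Person(BaseModel):
    name: str
    age: int
    email: str


# Hypothetical raw LLM outputs illustrating common failure modes
raw_outputs = [
    '{"name": "Alice", "age": 30, "email": "alice@example.com"}',     # valid
    '{"name": "Bob", "email": "bob@example.com"}',                    # missing "age"
    '{"name": "Charlie", "age": "forty", "email": "c@example.com"}',  # wrong type
    'Sure! Here is the JSON: {"name": "Diana"}',                      # not valid JSON
]


def parse_or_none(raw: str) -> Optional[Person]:
    """Return a validated Person, or None if the output cannot be used."""
    try:
        return Person.model_validate_json(raw)
    except ValidationError as exc:
        # Each error carries its location and a machine-readable type
        print([(err["loc"], err["type"]) for err in exc.errors()])
        return None


results: List[Optional[Person]] = [parse_or_none(raw) for raw in raw_outputs]
```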

Security

Unvalidated or poorly structured inputs and outputs can expose applications to security vulnerabilities such as injection attacks, malformed data exploitation, or denial-of-service scenarios.

Structured data validation acts as a safeguard, ensuring that only well-formed, type-safe data is accepted and processed.

This reduces the attack surface and helps maintain the integrity and confidentiality of AI-driven systems.
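A model can be made stricter so that it rejects unexpected keys and out-of-range values. The sketch below assumes Pydantic v2. StrictPerson and its length and range limits are illustrative assumptions, not values taken from this article:

```python
from pydantic import BaseModel, ConfigDict, Field, ValidationError


class StrictPerson(BaseModel):
    # Reject keys the schema does not declare (e.g. an injected "role" field)
    model_config = ConfigDict(extra="forbid")

    # Illustrative bounds; choose limits that match your own data
    name: str = Field(min_length=1, max_length=100)
    age: int = Field(ge=0, le=150)
    email: str = Field(max_length=254)


def error_types(payload: dict) -> list:
    """Return the Pydantic error types for a payload (empty list if it is valid)."""
    try:
        StrictPerson.model_validate(payload)
        return []
    except ValidationError as exc:
        return [err["type"] for err in exc.errors()]


print(error_types({"name": "Eve", "age": 30, "email": "eve@example.com"}))  # []
print(error_types({"name": "Eve", "age": 30, "email": "eve@example.com", "role": "admin"}))  # ['extra_forbidden']
print(error_types({"name": "", "age": -5, "email": "eve@example.com"}))
```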


Practical Example: From CSV to Validated JSON

Let's consider processing a CSV file of user data, some of it incomplete, into structured JSON representing user profiles.

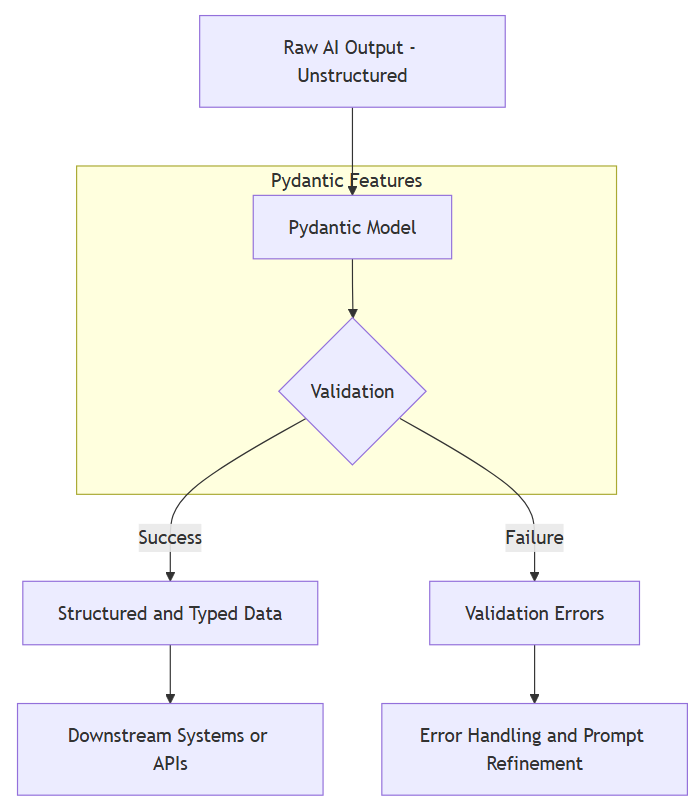

In terms of flow, we will implement this logic:

Define Pydantic Model

```python
import os
import json

from pydantic import BaseModel, ValidationError
from mistralai import Mistral

# -----------------------------
# Pydantic Model for Validation
# -----------------------------
class Person(BaseModel):
    name: str
    age: int
    email: str
```

Here we define a simple Pydantic model that describes the schema of our intended JSON: every record needs a string name, an integer age, and a string email.
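The prompt later embeds this model's JSON schema, so it helps to see what Pydantic actually generates. The small sketch below assumes Pydantic v2 and prints the schema for Person. It also prints the array schema for a list of Person records via TypeAdapter, because the prompt asks for a JSON array rather than a single object:

```python
import json
from typing import List

from pydantic import BaseModel, TypeAdapter


class Person(BaseModel):
    name: str
    age: int
    email: str


# Schema for a single record: all three fields are required
person_schema = Person.model_json_schema()
print(json.dumps(person_schema, indent=2))

# Schema for a JSON array of records; Person is placed under "$defs"
array_schema = TypeAdapter(List[Person]).json_schema()
print(json.dumps(array_schema, indent=2))
```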

Function to Call the Mistral API in JSON Mode

```python
# --------------------------------------
# Function to Call Mistral in JSON Mode
# --------------------------------------
def call_mistral_json_mode(prompt: str, system_message: str = "") -> str:
    """Call the Mistral API with a prompt and optional system message, expecting a JSON object response."""
    api_key = os.environ.get("MISTRAL_API_KEY")
    if not api_key:
        raise RuntimeError("Please set the MISTRAL_API_KEY environment variable.")

    model = "mistral-large-latest"
    client = Mistral(api_key=api_key)

    # The system message (if any) goes first so it frames the conversation
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": prompt})

    chat_response = client.chat.complete(
        model=model,
        messages=messages,
        response_format={"type": "json_object"},  # enable JSON mode
    )
    return chat_response.choices[0].message.content
```

Here, we call the Mistral API with the mistral-large-latest model and set response_format to json_object, which enables JSON mode so the response comes back as JSON rather than free text. The system message is only sent when one is provided.

Note: Mistral AI also provides a chat.parse method that accepts a Pydantic model directly as the response_format. For this example, I kept the logic generic so it can be reused with other LLMs.
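If you swap in a provider without a JSON mode, the raw text often arrives wrapped in a Markdown code fence or surrounded by commentary. One defensive option is to extract the JSON text before handing it to Pydantic. The helper below, extract_json_text, is a hypothetical addition and not part of the original project:

```python
import re

# Matches an optional Markdown code fence (three backticks, optional "json" tag)
FENCE_PATTERN = re.compile(r"`{3}(?:json)?\s*(.*?)\s*`{3}", re.DOTALL)


def extract_json_text(raw: str) -> str:
    """Return the contents of the first fenced block, or the stripped text if there is none."""
    match = FENCE_PATTERN.search(raw)
    return match.group(1) if match else raw.strip()


fence = "`" * 3
wrapped = f'Here you go:\n{fence}json\n[{{"name": "Alice"}}]\n{fence}'
print(extract_json_text(wrapped))               # [{"name": "Alice"}]
print(extract_json_text('  {"name": "Bob"} '))  # {"name": "Bob"}
```

Because the helper only returns text, the validation step that follows stays the same whichever provider produced the string.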

Read CSV file

```python
# -----------------------------
# Read CSV Input from File
# -----------------------------
with open("example_incomplete.csv", "r", encoding="utf-8") as f:
    csv_input = f.read().strip()
```

Here, we simply read the CSV into a variable.
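Before involving the model at all, you can validate the raw rows directly. This sketch assumes Pydantic v2 and inlines the same rows as example_incomplete.csv so it runs on its own. It uses Python's csv module and shows that only one row fails as-is. Bob's empty age cannot be parsed as an integer. Charlie's empty email is still a valid str, so the Person model as written does not flag it:

```python
import csv
import io
from typing import List

from pydantic import BaseModel, ValidationError


class Person(BaseModel):
    name: str
    age: int
    email: str


# Same rows as example_incomplete.csv, inlined so the snippet is self-contained
csv_text = """name,age,email
Alice,30,alice@example.com
Bob,,bob@example.com
Charlie,40,
Diana,25,diana@example.com"""

failed: List[str] = []
for row in csv.DictReader(io.StringIO(csv_text)):
    try:
        Person.model_validate(row)  # "30" is coerced to 30; "" is not a valid int
    except ValidationError as exc:
        failed.append(row["name"])
        print(row["name"], [err["type"] for err in exc.errors()])

print("Rows failing validation:", failed)  # Rows failing validation: ['Bob']
```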

The CSV used in this example is:

```csv
name,age,email
Alice,30,alice@example.com
Bob,,bob@example.com
Charlie,40,
Diana,25,diana@example.com
```

Note that Bob has no age and Charlie has no email.

Initial Prompt and AI Response

```python
# -----------------------------
# Initial Prompt Construction
# -----------------------------
model_json_schema = Person.model_json_schema()

# json.dumps embeds the schema as real JSON rather than a Python dict repr
prompt = f"""
Given the following CSV data, return a JSON array of objects with fields: {json.dumps(model_json_schema)}

CSV:
{csv_input}

Example output:
[
  {{"name": "Alice", "age": 30, "email": "alice@example.com"}},
  {{"name": "Bob", "age": 25, "email": "bob@example.com"}}
]
"""

print("\n" + "=" * 50)
print("Mistral CSV to Structured Example: Attempt 1")
print("=" * 50 + "\n")

response = call_mistral_json_mode(prompt)
print("Mistral response:\n", response)
```

Here, we define the initial prompt, which embeds the model's JSON schema, and call the Mistral API with the helper function call_mistral_json_mode.
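The prompt asks for a JSON array, so the response can be validated as a whole with TypeAdapter. This is a minimal sketch that assumes Pydantic v2, and the response string is a hypothetical stand-in rather than actual Mistral output. The error location includes the array index, which pinpoints the failing record. If the model instead wraps the array in an object, that wrapper would need to be unwrapped (or modeled) first.

```python
from typing import List

from pydantic import BaseModel, TypeAdapter, ValidationError


class Person(BaseModel):
    name: str
    age: int
    email: str


people_adapter = TypeAdapter(List[Person])

# Hypothetical model response: the second record is missing "age"
response = """[
  {"name": "Alice", "age": 30, "email": "alice@example.com"},
  {"name": "Bob", "email": "bob@example.com"}
]"""

try:
    people = people_adapter.validate_json(response)
    print("Valid:", [p.name for p in people])
except ValidationError as exc:
    for err in exc.errors():
        # loc is (index, field), e.g. (1, 'age') for the second record
        print(err["loc"], err["msg"])
```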

Validation and Retry Loop
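The article's own implementation of this step is in the linked repository. What follows is an independent, minimal sketch of the idea, not the author's code. It validates the response and, on failure, re-prompts with the original request plus the rejected answer and its validation errors. The generate_validated helper and its call_llm parameter are hypothetical. In practice call_llm could wrap call_mistral_json_mode or another provider. Here a fake function stands in so the snippet runs offline.

```python
from typing import Callable, List, Optional

from pydantic import BaseModel, TypeAdapter, ValidationError


class Person(BaseModel):
    name: str
    age: int
    email: str


people_adapter = TypeAdapter(List[Person])


def generate_validated(
    prompt: str,
    call_llm: Callable[[str], str],
    max_attempts: int = 3,
) -> List[Person]:
    """Hypothetical helper: call the LLM, validate, and retry with error feedback."""
    current_prompt = prompt
    last_error: Optional[ValidationError] = None
    for _ in range(max_attempts):
        raw = call_llm(current_prompt)
        try:
            return people_adapter.validate_json(raw)
        except ValidationError as exc:
            last_error = exc
            problems = "\n".join(f"- {err['loc']}: {err['msg']}" for err in exc.errors())
            # Improved prompt: original request + the rejected answer + what was wrong
            current_prompt = (
                f"{prompt}\n\nYour previous answer was:\n{raw}\n\n"
                f"It failed validation:\n{problems}\n\n"
                "Return only a corrected JSON array that matches the schema."
            )
    raise RuntimeError(f"No valid output after {max_attempts} attempts") from last_error


# Fake LLM for demonstration: fails once with invalid JSON, then succeeds
fake_replies = iter([
    "not json",
    '[{"name": "Alice", "age": 30, "email": "alice@example.com"}]',
])
seen_prompts: List[str] = []


def fake_llm(p: str) -> str:
    seen_prompts.append(p)
    return next(fake_replies)


people = generate_validated("Convert the CSV to JSON.", fake_llm)
print(people)
```

Feeding the concrete errors back, rather than just repeating the original prompt, gives the model a specific target to fix. The max_attempts cap keeps a persistently failing model from looping forever.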
