Recommendation systems are essential in today's digital landscape.

They enhance user engagement by suggesting relevant content, products, or services.

From Netflix recommending movies to Amazon suggesting products, these systems analyze user behavior and item attributes to deliver personalized experiences.

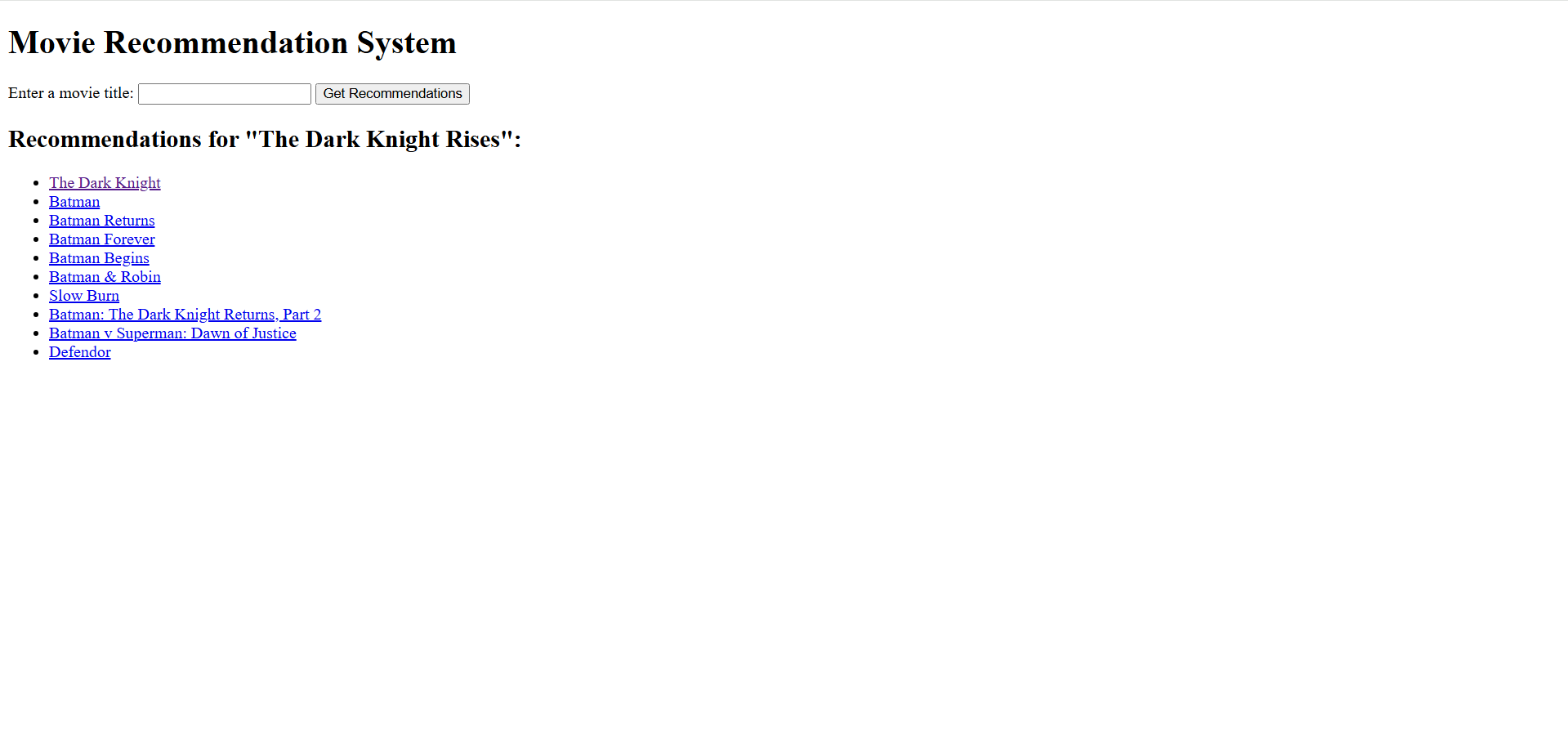

In this guide, we'll build a content-based recommendation system using Python and integrate it into a Flask web application.

Understanding Recommendation Systems

Recommendation systems are algorithms that predict user preferences and suggest items accordingly.

They are essential for:

- Enhancing user experience by providing personalized content

- Increasing engagement and retention rates

- Boosting sales and conversions in e-commerce platforms

There are three main types of recommendation systems:

- Content-Based Filtering: Recommends items similar to those a user liked in the past based on item attributes.

- Collaborative Filtering: Recommends items that similar users liked, focusing on user behavior patterns.

- Hybrid Systems: Combine content-based and collaborative filtering methods to leverage both strengths.

In this guide, we'll focus on content-based filtering.


Preparing the Dataset

For this article and examples, we'll use the TMDB 5000 Movie Dataset from Kaggle.

This dataset contains information about 5,000 movies, including their titles, overviews, genres, and more.

Note: You'll need a Kaggle account to download the dataset.

Downloading the Dataset

- Go to the TMDB 5000 Movie Dataset page.

- Sign in to your Kaggle account.

- Download the tmdb_5000_movies.csv file.

Loading and Inspecting the Dataset

Create a Python script named recommendation_engine.py and start by loading the dataset:

import pandas as pd

# Load the dataset

df = pd.read_csv('tmdb_5000_movies.csv')

# Display the first few rows

print(df.head())

Don't forget to first install pandas with:

pip install pandas

Output:

      budget  ...  vote_count
0  237000000  ...       11800
1  300000000  ...        4500
2  245000000  ...        4466
3  250000000  ...        9106
4  260000000  ...        2124

[5 rows x 20 columns]

Data Preprocessing

We need to preprocess the data to extract useful information for our recommendation engine.

We'll use the following features:

- Title: The name of the movie.

- Overview: A brief description of the movie.

- Genres: The genres associated with the movie.

- Keywords: Important keywords related to the movie.
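Before preprocessing, it can be useful to see how many values are missing in these four columns. The following is a minimal sketch: `missing_report` is a hypothetical helper (not part of pandas), and the small `sample` frame is an invented stand-in for the real dataset.

```python
import pandas as pd

def missing_report(frame, columns):
    """Count missing (NaN/None) values in each of the given columns."""
    return frame[columns].isna().sum()

# Tiny hypothetical stand-in for the real DataFrame
sample = pd.DataFrame({
    "title": ["Movie A", "Movie B", "Movie C"],
    "overview": ["A crew explores space.", None, "Two friends open a cafe."],
    "genres": ["[]", "[]", "[]"],
    "keywords": ["[]", "[]", "[]"],
})

report = missing_report(sample, ["title", "overview", "genres", "keywords"])
print(report)
```

With the real data, the same call would be `missing_report(df, ['title', 'overview', 'genres', 'keywords'])`.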

Handling JSON Fields

Some fields like genres and keywords are stored as strings representing lists of dictionaries.

We'll need to parse these strings into actual Python lists.
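To make the format concrete, here is a small sketch of what one raw value looks like and how it parses. The sample string is hand-written to resemble a `genres` cell and is not copied from the CSV. Since such a value is also valid JSON, `json.loads` is shown next to `ast.literal_eval` as an alternative.

```python
import ast
import json

# Hypothetical raw value, shaped like a cell in the `genres` column
raw = '[{"id": 28, "name": "Action"}, {"id": 12, "name": "Adventure"}]'

# ast.literal_eval parses the string as a Python literal
names_ast = [item["name"] for item in ast.literal_eval(raw)]

# json.loads parses the same string as JSON
names_json = [item["name"] for item in json.loads(raw)]

print(names_ast)   # ['Action', 'Adventure']
print(names_json)  # ['Action', 'Adventure']
```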

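import ast

def parse_features(x):
    """Return the 'name' of each entry in a stringified list of dictionaries."""
    try:
        return [i['name'] for i in ast.literal_eval(x)]
    except (ValueError, SyntaxError, TypeError, KeyError):
        # Missing or malformed values yield an empty list
        return []

# Apply the function to relevant columns
df['genres'] = df['genres'].apply(parse_features)
df['keywords'] = df['keywords'].apply(parse_features)

Creating a 'Soup' of Metadata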

We'll combine all relevant textual information into a single string for each movie.

def clean_data(x):
    """Join a list of names into one string; map NaN and other non-strings to ''."""
    if isinstance(x, list):
        return ' '.join(x)
    if isinstance(x, str):
        return x
    return ''

# Apply the cleaning function
for feature in ['genres', 'keywords']:
    df[feature] = df[feature].apply(clean_data)

# Fill missing overviews first so that str() does not turn NaN into the word 'nan'
df['overview'] = df['overview'].fillna('')

# Combine all features into a 'soup'
def create_soup(row):
    return ' '.join([str(row['keywords']), str(row['genres']), str(row['overview'])])

df['soup'] = df.apply(create_soup, axis=1)

Handling Missing Values

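Because create_soup wraps every field in str(), the soup column should already contain only strings. The fillna call below is a cheap safeguard in case that changes.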

df['soup'] = df['soup'].fillna('')

Explaining the Algorithms

To understand how our recommendation engine works, let's delve into the key algorithms involved.

TF-IDF Vectorization

TF-IDF stands for Term Frequency-Inverse Document Frequency.

It's a technique used to determine how important a word is to a particular document within a larger collection of documents.

- Term Frequency (TF) counts how many times a word appears in a single document. The idea is that words appearing more frequently in a document might be more significant to that document's content.

- Inverse Document Frequency (IDF) measures how common or rare a word is across all documents. If a word appears in many documents, it becomes less useful for identifying unique content, so it gets a lower score.

By combining these two measures, TF-IDF gives higher scores to words that are important in a specific document but not common in other documents.

This helps in highlighting the unique words that best describe the document's content.
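The idea can be sketched in a few lines of plain Python. This is an illustration of the textbook formula, with TF as the share of a document's words and IDF as log(N / df). The three documents are invented for the example.

```python
import math
from collections import Counter

# Three tiny hypothetical "documents", already lowercased
docs = [
    "space crew fights alien",
    "alien invasion on earth",
    "romantic comedy on earth",
]
tokenized = [doc.split() for doc in docs]
n_docs = len(tokenized)

# Document frequency: number of documents that contain each word
doc_freq = Counter(word for tokens in tokenized for word in set(tokens))

def tf_idf(tokens):
    """Return {word: tf * idf} for one document, with idf = log(N / df)."""
    counts = Counter(tokens)
    return {
        word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
        for word, count in counts.items()
    }

scores = tf_idf(tokenized[0])
# "space" occurs in one document and "alien" in two, so "space" scores higher
print(sorted(scores.items(), key=lambda kv: -kv[1]))
```

scikit-learn's `TfidfVectorizer` follows the same idea but, by default, uses a smoothed IDF and scales each document vector to unit length, so its numbers will differ from this sketch.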

Cosine Similarity

Cosine Similarity is a way to measure how similar two documents are, regardless of their size.

Imagine representing each document as a list of numbers based on word importance (like the TF-IDF scores).

Cosine Similarity calculates the cosine of the angle between these two vectors in multi-dimensional space. Because TF-IDF scores and word counts are never negative, the result always falls between 0 and 1.

- If the angle is small (the cosine value is close to 1), the documents are very similar: they share a lot of important words.

- If the angle is close to 90° (the cosine value is close to 0), the documents are quite different: they share few or no important words.

This method allows us to quantify the similarity between documents based on their content, making it easier to find and recommend documents that are alike.
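As a rough illustration, the calculation can be written out by hand. The vectors below are invented word-weight lists over a shared four-word vocabulary, and returning 0.0 for an all-zero vector is a convention chosen for this sketch.

```python
import math

def cosine_sim(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention here: an empty document matches nothing
    return dot / (norm_a * norm_b)

# Hypothetical word-weight vectors over the same four-word vocabulary
doc_a = [1.0, 2.0, 0.0, 0.0]
doc_b = [2.0, 4.0, 0.0, 0.0]  # same direction as doc_a, twice as long
doc_c = [0.0, 0.0, 3.0, 1.0]  # shares no words with doc_a

print(cosine_sim(doc_a, doc_b))  # approximately 1: length does not matter
print(cosine_sim(doc_a, doc_c))  # 0.0: no words in common
```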

Building the Recommendation Engine

Now, let's implement the recommendation engine using the algorithms we've discussed.

Step 1: Import Required Libraries

First, install the required libraries with pip:

pip install scikit-learn

Then you can import them:

from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

from sklearn.metrics.pairwise import cosine_similarity

Step 2: TF-IDF Vectorization

We can use TF-IDF for textual data like the overview.

# Initialize the TF-IDF Vectorizer

tfidf = TfidfVectorizer(stop_words='english')

# Replace NaN with an empty string

df['overview'] = df['overview'].fillna('')

# Compute TF-IDF matrix for the 'overview' field

tfidf_matrix = tfidf.fit_transform(df['overview'])

Step 3: Compute Cosine Similarity Matrix for Overviews

# Compute the cosine similarity matrix

cosine_sim_overview = cosine_similarity(tfidf_matrix, tfidf_matrix)
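One detail worth knowing: `TfidfVectorizer` scales each row to unit length by default, so plain dot products already equal cosine similarities. The sketch below checks this on three invented overviews using scikit-learn's `linear_kernel`. It is an optional alternative, not a required change to the step above.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity, linear_kernel

# Hypothetical overviews standing in for df['overview']
overviews = [
    "A space crew fights an alien creature.",
    "Aliens invade Earth and a pilot fights back.",
    "Two strangers fall in love in Paris.",
]

matrix = TfidfVectorizer(stop_words="english").fit_transform(overviews)

sim_cosine = cosine_similarity(matrix, matrix)
sim_linear = linear_kernel(matrix, matrix)  # plain dot products

# Rows are already unit length, so both matrices agree
print(np.allclose(sim_cosine, sim_linear))
print(sim_cosine.shape)
```

Skipping the normalization step can save some time on larger matrices, though the difference for a few thousand movies is likely small.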

Step 4: Count Vectorization for Metadata 'Soup'

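For the combined metadata, we'll use CountVectorizer, which works on raw term counts. It is a common choice for short, tag-like text such as genres and keywords because, unlike TF-IDF, it does not down-weight a term for appearing in many movies.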

# Initialize the Count Vectorizer

count = CountVectorizer(stop_words='english')

# Compute the count matrix

count_matrix = count.fit_transform(df['soup'])

Step 5: Compute Cosine Similarity Matrix for Metadata

# Compute the cosine similarity matrix

cosine_sim_metadata = cosine_similarity(count_matrix, count_matrix)

Step 6: Combining Similarities

Optionally, we can combine both similarity matrices to improve recommendations.

# Average the two similarity matrices

cosine_sim = (cosine_sim_overview + cosine_sim_metadata) / 2
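A plain average gives both signals equal say. If one of them turns out to be more useful, a weighted average is a natural variation. The sketch below uses a hypothetical helper `blend_similarities` and an invented `weight_a` parameter, with small made-up matrices in place of the real ones.

```python
import numpy as np

def blend_similarities(sim_a, sim_b, weight_a=0.5):
    """Weighted average of two same-shaped similarity matrices."""
    if sim_a.shape != sim_b.shape:
        raise ValueError("similarity matrices must have the same shape")
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must be between 0 and 1")
    return weight_a * sim_a + (1.0 - weight_a) * sim_b

# Hypothetical 2x2 matrices standing in for the overview and metadata scores
sim_overview = np.array([[1.0, 0.2], [0.2, 1.0]])
sim_metadata = np.array([[1.0, 0.6], [0.6, 1.0]])

equal = blend_similarities(sim_overview, sim_metadata)  # same as the plain average
metadata_heavy = blend_similarities(sim_overview, sim_metadata, weight_a=0.25)
print(equal[0, 1], metadata_heavy[0, 1])
```

With `weight_a=0.5` this reduces to the average used above. Any other weight is a tuning choice to be judged by inspecting the resulting recommendations.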

Step 7: Building the Recommendation Function

Create a mapping from movie titles to indices.

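# Reset the index so row positions match the similarity matrices
df = df.reset_index(drop=True)

# Build a title -> row position lookup
indices = pd.Series(df.index, index=df['title'])

# Keep the first row for each duplicated title, so a lookup returns one position.
# (Series.drop_duplicates() would compare the values, which are already unique.)
indices = indices[~indices.index.duplicated(keep='first')]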

Implement the recommendation function.
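The original implementation is not included in this excerpt. As a stand-in, here is one possible sketch of such a function, not the author's code. The function name, its parameters, and the four-movie catalogue are all assumptions made for illustration, and it assumes each title maps to a single row.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics.pairwise import cosine_similarity

def get_recommendations(title, similarity, title_to_index, titles, top_n=10):
    """Return the top_n titles most similar to `title`, best match first."""
    if title not in title_to_index:
        return []
    idx = title_to_index[title]
    # Pair every movie's row position with its similarity to the query movie
    scores = list(enumerate(similarity[idx]))
    # Drop the query movie itself, then sort by descending similarity
    scores = [(i, s) for i, s in scores if i != idx]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [titles.iloc[i] for i, _ in scores[:top_n]]

# Tiny hypothetical catalogue standing in for the real DataFrame
movies = pd.DataFrame({
    "title": ["Star Quest", "Alien Dawn", "Paris Hearts", "Galaxy Crew"],
    "soup": [
        "space crew alien adventure",
        "alien invasion earth action",
        "romance paris comedy",
        "space crew galaxy adventure",
    ],
})
matrix = CountVectorizer().fit_transform(movies["soup"])
sim = cosine_similarity(matrix, matrix)
lookup = pd.Series(movies.index, index=movies["title"])

result = get_recommendations("Star Quest", sim, lookup, movies["title"], top_n=2)
print(result)  # ['Galaxy Crew', 'Alien Dawn']
```

With the objects built in this guide, the equivalent call would be `get_recommendations('Avatar', cosine_sim, indices, df['title'])`.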
