Working with LLMs often starts with excitement and quickly turns into frustration.

You wire together a few tools, throw in some prompts, maybe add LangChain or OpenAI function calling, and it kind of works. Until it doesn’t.

You tweak a prompt, and suddenly the agent can’t find the right tool. You add a new function, and now you’re debugging JSON schema errors from a black box.

You try to test locally and realize… You can’t. Not easily, not meaningfully.

The problems pile up fast:

- Prompt chains are fragile and impossible to validate.

- OpenAI tool calling is great until you need flexibility.

- LangChain does everything, but it’s heavy, slow, and hard to reason about.

- Local-first development? Forget it.

What should be a clean loop (define tools → expose them → test the agent → ship) turns into duct tape and guesswork.

“I just want to define some tools, wire up an agent, and test the damn thing.”

FastMCP was built to solve exactly this. No orchestration glue. No hidden layers. Just Pythonic, testable, LLM-safe tools that behave the way you expect, because you wrote them that way.

If you prefer the video version:

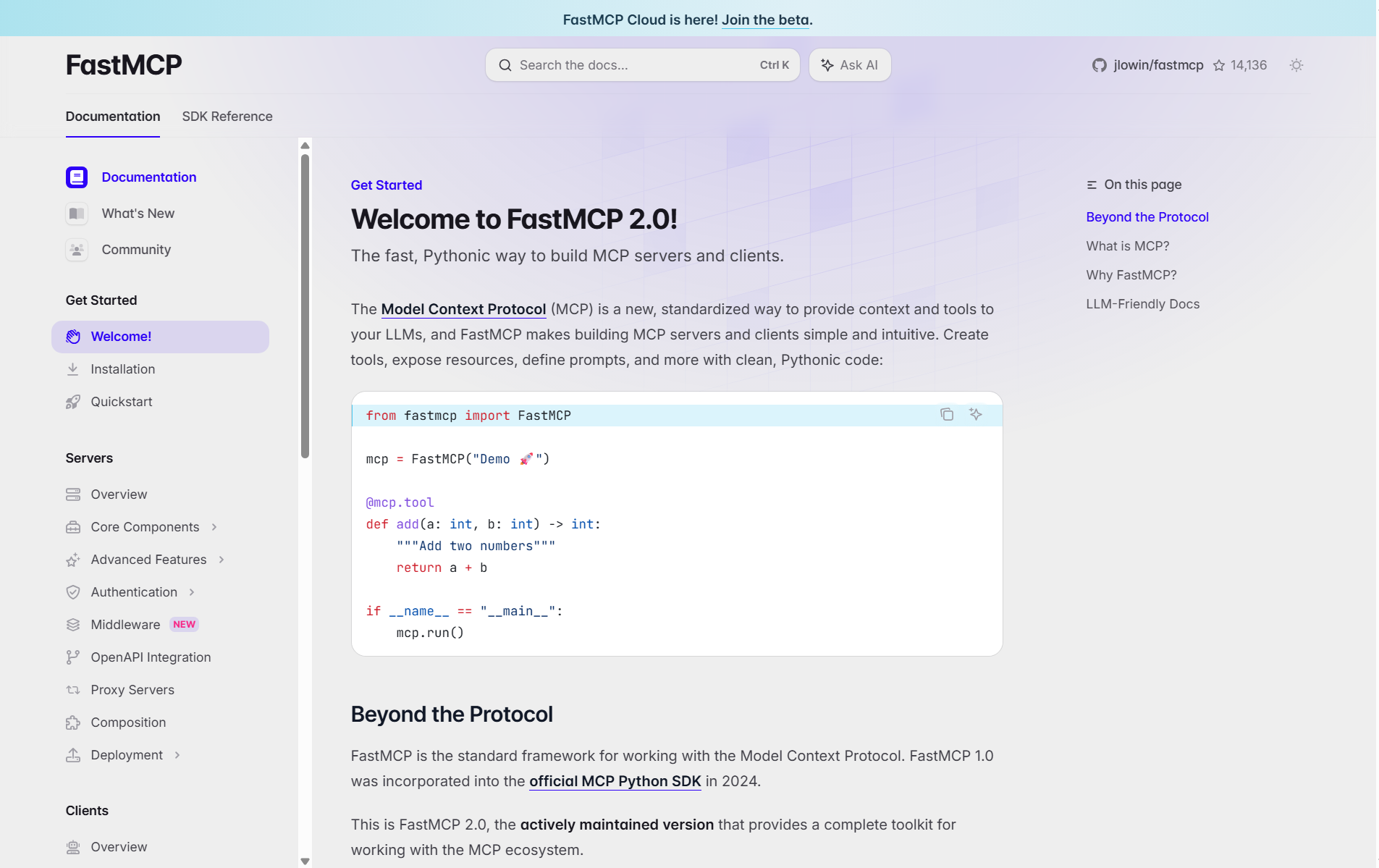

What is FastMCP?

FastMCP is a Python-first framework for building LLM-safe APIs, a structured way to expose tools, prompts, and resources to language models.

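It implements the Model Context Protocol (MCP), an open protocol introduced by Anthropic that standardizes how LLM applications connect to tools and data, with the goal of making LLM-agent interactions more predictable, inspectable, and testable. FastMCP 1.0 was incorporated into the official MCP Python SDK, and the standalone project has continued to develop since.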

Created by Jeremiah Lowin, CEO of Prefect, FastMCP is what happens when you take OpenAI’s tool calling seriously and reimagine it for real-world developers.

At the heart of FastMCP is a simple but powerful idea:

Everything is a tool.

Whether it’s a function, a prompt, a resource, or an agent call, it’s treated as a typed, callable object with clear inputs and outputs.

That means:

- It’s introspectable by LLMs (via JSON schemas or natural-language docs).

- It’s testable by you (in memory, without a server).

- It’s just Python (no decorators that swallow errors, no mysterious “chains”).

FastMCP doesn’t replace your model or your frontend; it replaces the glue layer between LLMs and the real world.


How FastMCP Adds Value

FastMCP isn’t trying to be everything. It’s trying to be the thing that actually works, a developer-first, LLM-native interface layer that doesn’t fight you.

Here’s what makes it stand out:

In-Memory Testing

You don’t need a running server or an OpenAI key to test your tools.

With Client(server), you can call any tool (or entire toolchain) directly in Python, just like a unit test.

No more “vibe testing” agents in production. You can validate logic locally, fast.

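    # Pass the FastMCP server object itself (here `mcp`) to use the in-memory transport;
    # passing a file path such as "server.py" would launch a subprocess instead.
    client = Client(mcp)

    ...

    result = await client.call_tool("greet", {"name": name})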

Typed Tool Interfaces

Every tool is defined with standard Python type hints.

FastMCP converts these into structured schemas that LLMs can understand and that developers can trust.

You’ll never wonder what a tool expects or returns. It’s all explicit and introspectable.

    @mcp.tool
    def greet(name: str) -> str:
        ...
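To make the idea concrete, here is a minimal sketch of how type hints can be turned into a schema using only the standard library. It is not FastMCP's actual implementation: the helper `describe_tool`, the `JSON_TYPES` mapping, and the extra `punctuation` parameter are assumptions made for illustration, and only a handful of simple types are handled.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from a few Python types to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func):
    """Build a JSON-Schema-like description of `func` from its signature."""
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        json_type = JSON_TYPES.get(hints.get(name))
        prop = {"type": json_type} if json_type else {}
        if param.default is inspect.Parameter.empty:
            # No default value: the caller must supply this argument.
            required.append(name)
        else:
            prop["default"] = param.default
        properties[name] = prop
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def greet(name: str, punctuation: str = "!") -> str:
    """Return a personalized greeting."""
    return f"Hello, {name}{punctuation}"


schema = describe_tool(greet)
print(schema)
```

The point of the sketch is that the function signature is the single source of truth: the parameter names, types, defaults, and docstring are all read from the function itself, so the description handed to a model cannot drift from the code.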

No Boilerplate

FastMCP includes everything you need to expose a tool: type handling, JSON schema generation, MCP-compliant tool listings, and even a local HTTP server (if you want one).

There’s no wiring, no adapters, no glue code. Just tools.

Composable and Modular

Tools can call other tools. Resources can be tagged and routed.

You can filter, wrap, log, override, or proxy any part of your system using middleware.

It’s like functional programming for LLM APIs: clean, composable, and testable.
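The wrapping idea can be sketched in plain Python. This is a conceptual illustration only, not FastMCP's middleware API: `TOOLS`, `call_tool`, `with_logging`, and `with_allowlist` are hypothetical names, and the "tool" is an ordinary function stored in a dictionary.

```python
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("tools")

# A handler takes a tool name and its arguments and returns the tool's result.
Handler = Callable[[str, dict], Any]

# Hypothetical tool registry: plain functions keyed by name.
TOOLS = {"greet": lambda name: f"Hello, {name}!"}


def call_tool(tool_name: str, arguments: dict) -> Any:
    """Innermost handler: look the tool up and call it."""
    return TOOLS[tool_name](**arguments)


def with_logging(next_handler: Handler) -> Handler:
    """Wrap a handler so every call and result is logged."""
    def handler(tool_name: str, arguments: dict) -> Any:
        logger.info("calling %s with %s", tool_name, arguments)
        result = next_handler(tool_name, arguments)
        logger.info("%s returned %r", tool_name, result)
        return result
    return handler


def with_allowlist(allowed: set, next_handler: Handler) -> Handler:
    """Wrap a handler so only tools named in `allowed` can be called."""
    def handler(tool_name: str, arguments: dict) -> Any:
        if tool_name not in allowed:
            raise PermissionError(f"tool {tool_name!r} is not allowed")
        return next_handler(tool_name, arguments)
    return handler


# Compose the layers from the outside in: logging first, then filtering.
pipeline = with_logging(with_allowlist({"greet"}, call_tool))
result = pipeline("greet", {"name": "Ford"})
print(result)
```

Each layer has the same shape as the handler it wraps, so layers can be added, removed, or reordered without touching the tools themselves.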

LLM-Ready by Design

Whether you’re using OpenAI, Claude, or a local model, FastMCP outputs structured tool definitions, JSON-callable messages, and clear tool schemas.

LLMs don’t just “see” your tools; they understand how to use them.

In short, FastMCP gives you the benefits of a framework (structure, clarity, and speed) without the weight. It’s a developer-first toolkit for anyone building LLM-powered systems in production.

Quick Code Examples

Here are some code examples to give you an idea of how quick and simple it is to create AI-powered tools with FastMCP.

You can find the link to the full source code at the end of the article.

Before creating any of these examples, first install the FastMCP package:

pip install fastmcp

Example 1: Hello, FastMCP

In this first example, let's build a simple MCP server that exposes a tool that greets the user.

In a server.py file:

    from fastmcp import FastMCP

    # Create a new MCP server
    mcp = FastMCP("My MCP Server")

    # Create a new tool
    @mcp.tool
    def greet(name: str) -> str:
        return f"Hello, {name}!"

    # Run the server
    if __name__ == "__main__":
        mcp.run()

This code sets up a simple FastMCP server with one tool, greet, which returns a personalized greeting. The tool is typed, LLM-safe, and served with minimal setup by mcp.run(), which uses the stdio transport unless told otherwise.

Now, we build the client in a client.py file:

    import asyncio

    from fastmcp import Client

    # Create a new client
    client = Client("server.py")

    # Call the tool
    async def call_tool(name: str):
        async with client:
            result = await client.call_tool("greet", {"name": name})
            print(result)

    # Run the client asynchronously
    asyncio.run(call_tool("Ford"))

This code creates an asynchronous FastMCP client that connects to a local MCP server (server.py) and calls the greet tool with the input "Ford". It uses asyncio to run the request, and prints the result returned by the tool, demonstrating how to interact with MCP tools programmatically from a client.

Let's run the example:

$ python client.py

CallToolResult(content=[TextContent(type='text', text='Hello, Ford!', annotations=None, meta=None)], structured_content={'result': 'Hello, Ford!'}, data='Hello, Ford!', is_error=False)

As you can see, we were able to test the greet tool effortlessly by running the test client script.

Because the client was given a file path, FastMCP infers a stdio transport: it launches server.py as a subprocess, exchanges MCP messages with it over standard input and output, and executes the registered tool with the given parameters.

This streamlined testing flow doesn't require extra setup, mocks, or external API calls, which makes it incredibly easy to validate tool behaviour locally, debug workflows, and iterate quickly during development.

Example 2: Toolchain Composition

Let’s say you’re building a set of tools for an LLM to:

- Look up a product by ID,

- Calculate its discounted price,

- And format a final message.

FastMCP lets you model this with clear, testable tools. File server_composition.py:

    from fastmcp import FastMCP

    # Create a new MCP server
    mcp = FastMCP("PricingTools")

    # Step 1: Fake product lookup
    @mcp.tool()
    def get_product(product_id: int) -> dict:
        return {"id": product_id, "name": "Headphones", "price": 120.0}

    # Step 2: Discount calculator
    @mcp.tool()
    def apply_discount(price: float, percent: float = 10.0) -> float:
        return round(price * (1 - percent / 100), 2)

    # Step 3: Formatter
    @mcp.tool()
    def format_message(name: str, final_price: float) -> str:
        return f"The product '{name}' is available for ${final_price}."

    # Run the server
    if __name__ == "__main__":
        mcp.run()
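The pricing logic itself can be sanity-checked as plain Python before any client is involved. The sketch below repeats the three tool bodies without the @mcp.tool() decorator, an assumption made so that it runs with no dependencies, and chains them in the order an agent would be expected to call them. The product ID 42 is arbitrary.

```python
def get_product(product_id: int) -> dict:
    # Fake lookup: every ID returns the same product.
    return {"id": product_id, "name": "Headphones", "price": 120.0}


def apply_discount(price: float, percent: float = 10.0) -> float:
    # Reduce the price by `percent` and round to cents.
    return round(price * (1 - percent / 100), 2)


def format_message(name: str, final_price: float) -> str:
    return f"The product '{name}' is available for ${final_price}."


# Chain the three steps: look up, discount, format.
product = get_product(42)
final_price = apply_discount(product["price"])
message = format_message(product["name"], final_price)
print(message)  # The product 'Headphones' is available for $108.0.
```

Note that the price prints as $108.0 because the float is interpolated as-is; writing `{final_price:.2f}` in the f-string would render it as $108.00.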

Now, use FastMCP’s in-memory client to simulate an agent calling these tools. File client_composition.py:
