In this article, I’ll show you how to build a Python-based chat agent that leverages Bright Data’s Model Context Protocol (MCP) server alongside MistralAI’s chat model, orchestrated via LangChain adapters and LangGraph’s ReAct agent framework.

I cover environment setup, MCP server parameters, Python client initialization using STDIO transport, tool loading, and the asynchronous chat loop.

By following this guide, you’ll have a tool-enabled AI assistant that can invoke web scraping, proxy rotation, CAPTCHA solving, and other Bright Data capabilities from a chat interface powered by MistralAI.

What Is MCP?

The Model Context Protocol (MCP) is an open, JSON-RPC 2.0–based standard that lets AI models invoke external tools through a unified interface.
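To make the JSON-RPC framing concrete, here is a minimal sketch of the kind of message an MCP client sends when it asks a server to run a tool. The `tools/call` method and the `name`/`arguments` parameters follow the MCP specification. The helper `build_tool_call`, the tool name `example_scrape`, and its `url` argument are hypothetical placeholders for illustration, not actual Bright Data tool names.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",        # protocol version marker required by JSON-RPC 2.0
        "id": request_id,        # lets the client match the response to this request
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }


# "example_scrape" and its "url" argument are made-up names for this sketch
request = build_tool_call(1, "example_scrape", {"url": "https://example.com"})
wire_message = json.dumps(request)
print(wire_message)
```

In practice you rarely build these messages by hand: the client library used later in this article handles the framing for you.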

Think of MCP like the “USB-C port for AI applications,” providing a standardized way to plug in capabilities such as web scrapers, proxy rotation, CAPTCHA solving, and headless browsers.

Bright Data’s MCP server (@brightdata/mcp) is a Node.js implementation exposing powerful scraping and unlocking tools over STDIO or SSE transports.

By using MCP, your AI agents gain real-time, reliable access to both static and dynamic web data without the hassle of building complex scraping infrastructure.

Prerequisites

Bright Data Account & API Token

Sign up at Bright Data and create an MCP server token in your dashboard.

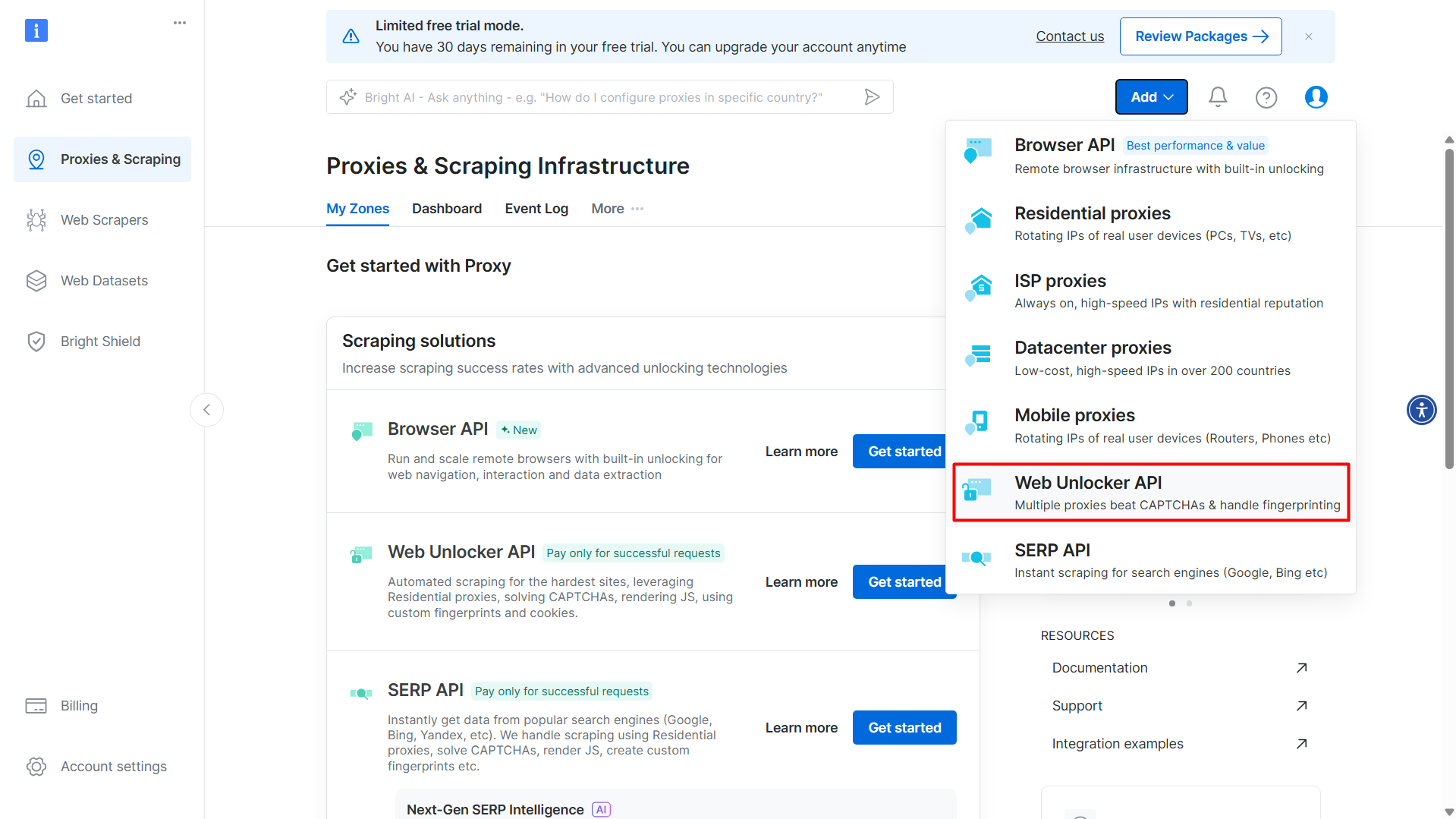

Then create a 'Web Unlocker API':

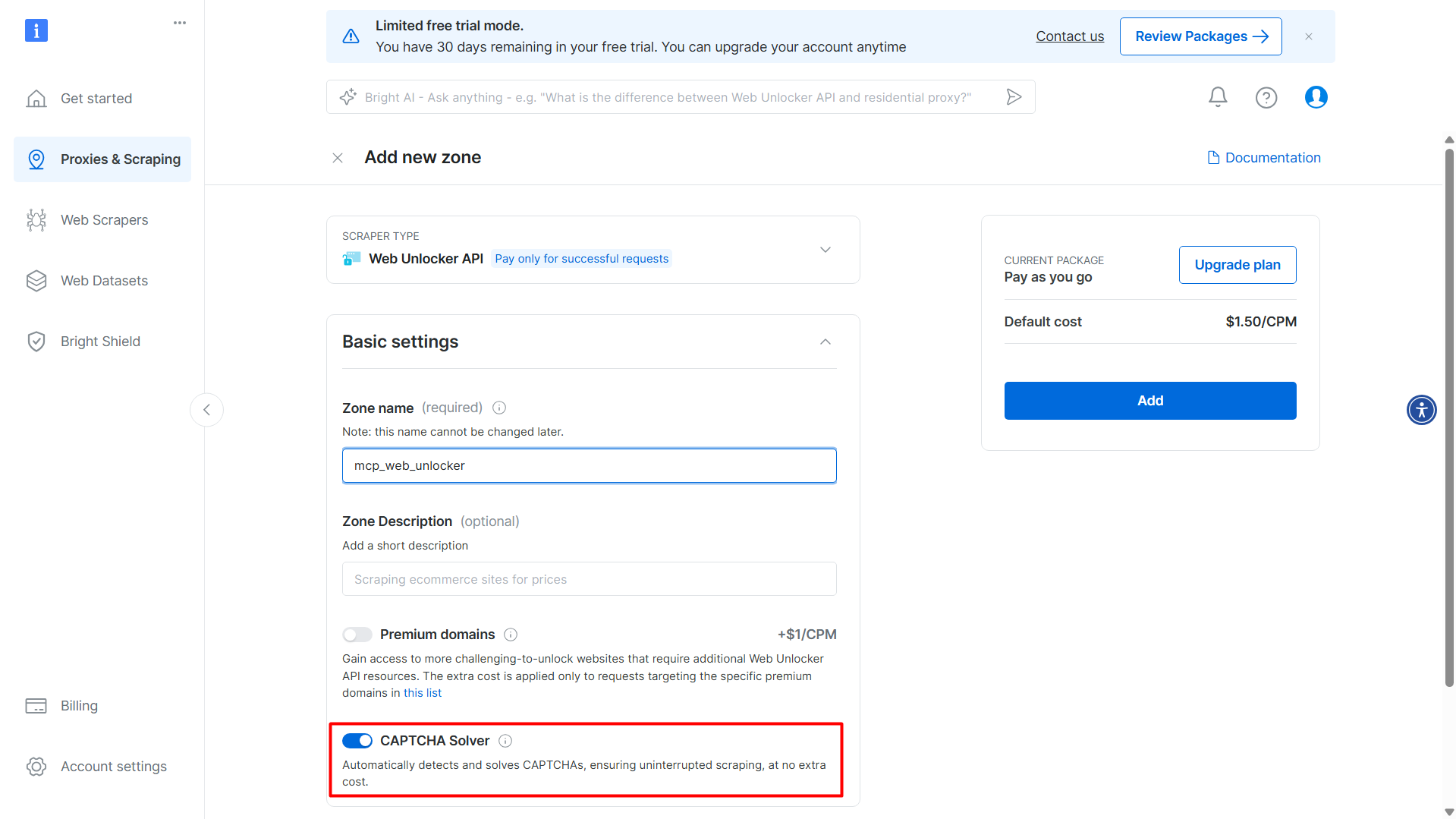

When creating it, make sure to enable 'CAPTCHA Solver' (it should be on by default):

Make a note of the 'Zone name'; it is required for the 'WEB_UNLOCKER_ZONE' environment variable.

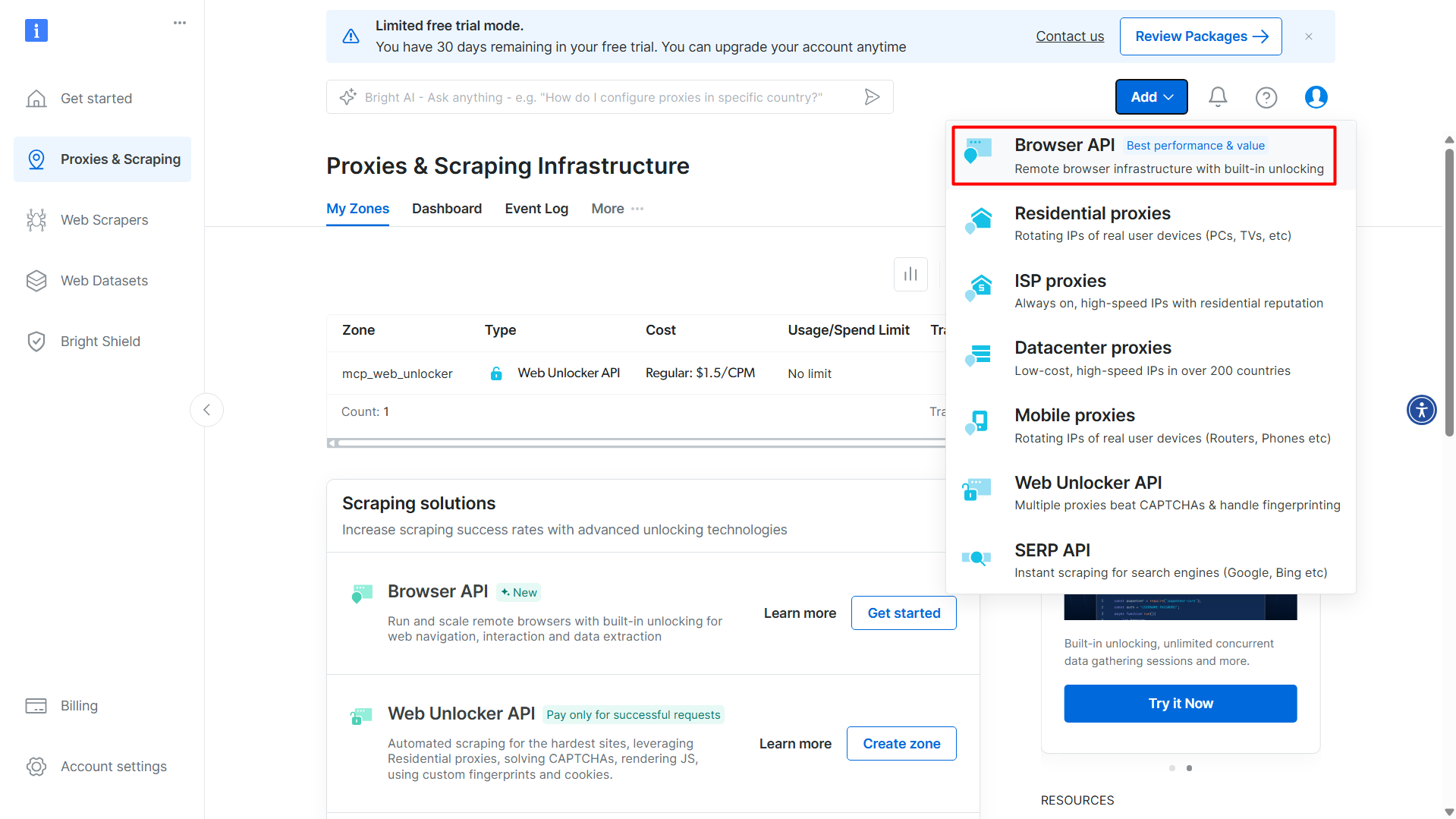

Additionally, you need to create a 'Browser API' to scrape JavaScript-heavy sites:

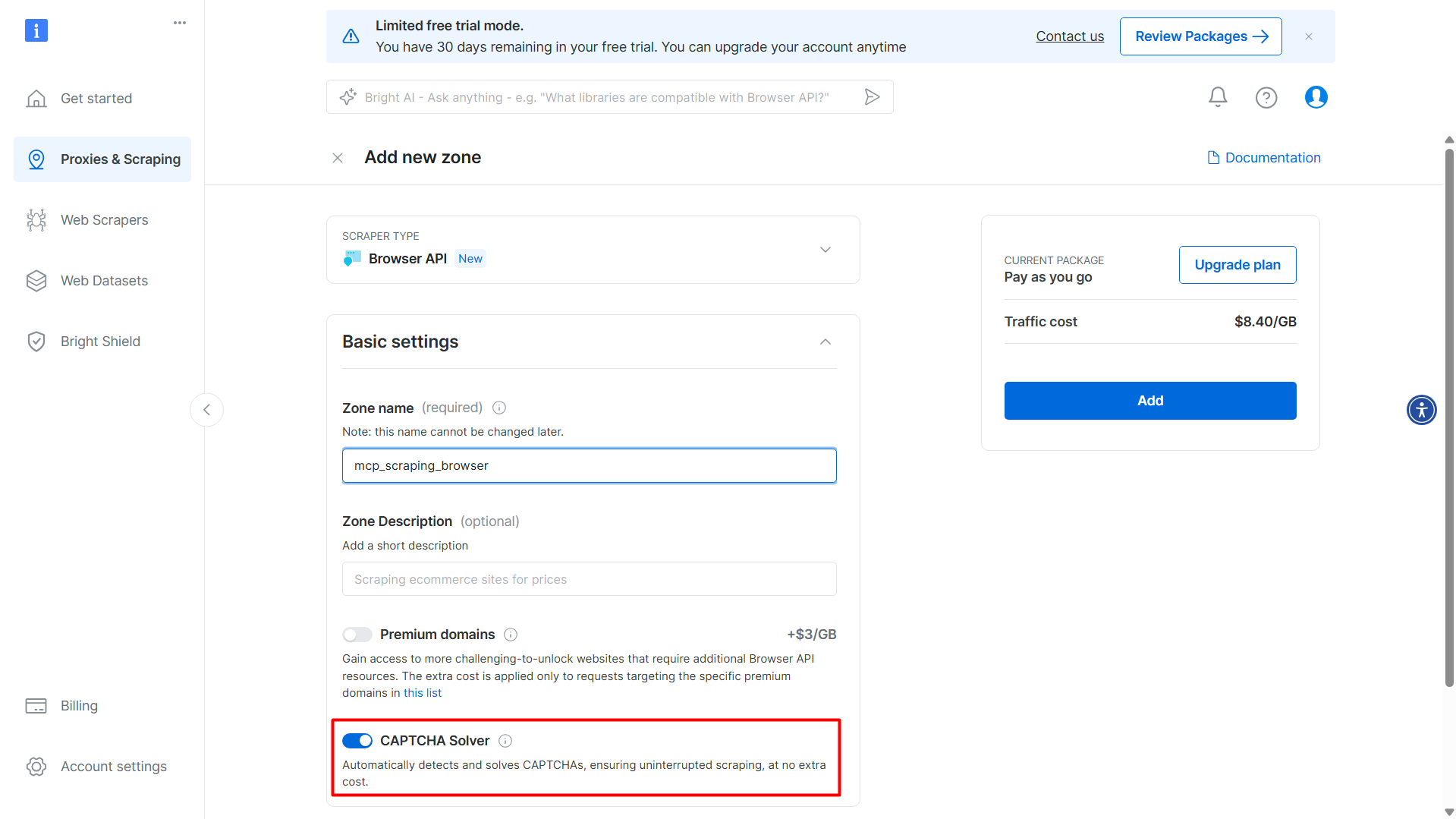

Again, when creating it, make sure to enable 'CAPTCHA Solver' (it should be on by default):

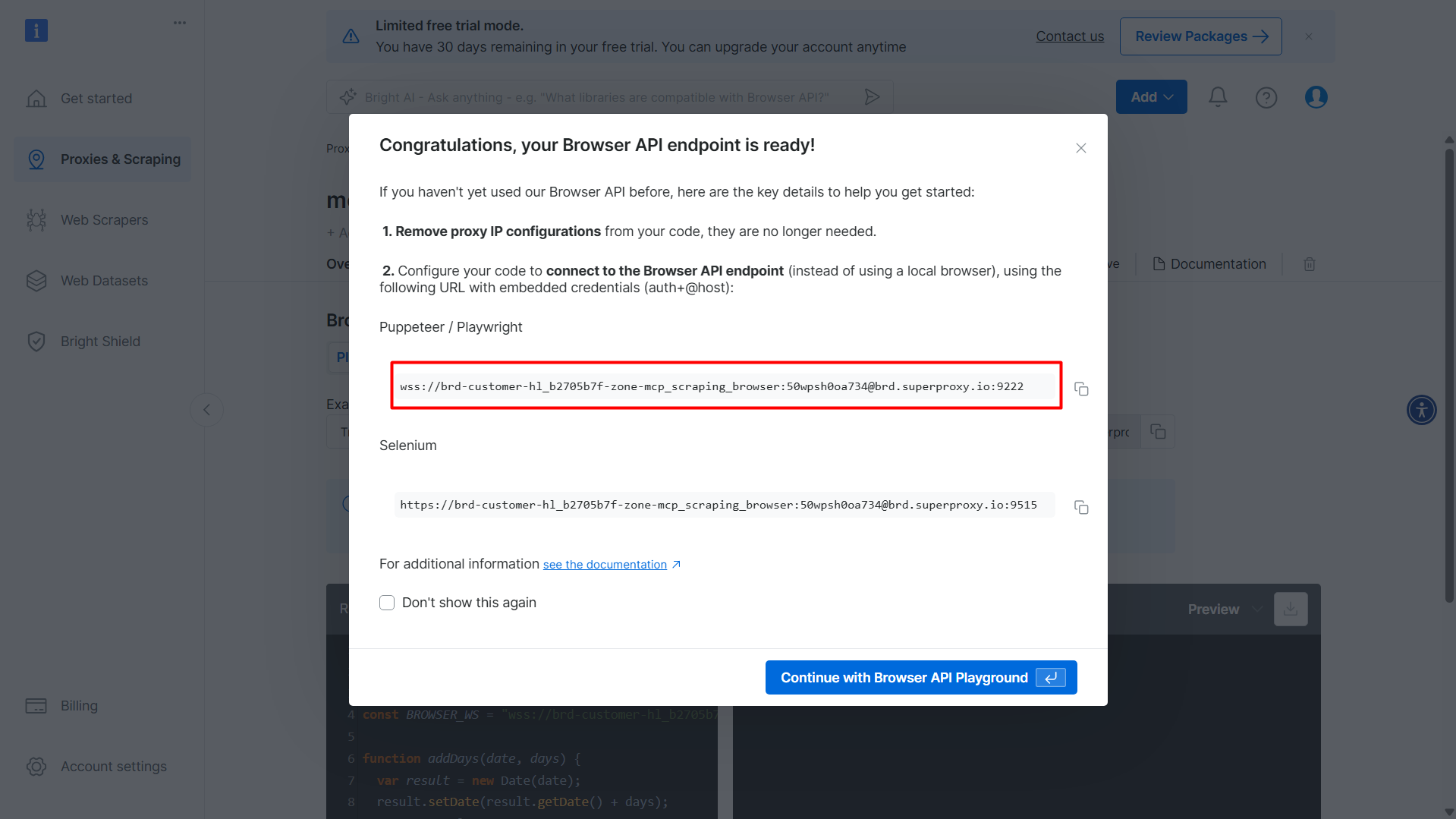

After creation, the dashboard displays the credentials.

Make a note of this credential; you will need it for the 'BROWSER_AUTH' environment variable later.

For this credential, the actual value used in the environment variable is:

brd-customer-hl_b2705b7f-zone-mcp_scraping_browser:50wpsh0oa734

(That is, remove the leading and trailing parts of the displayed credential and keep only the username:password portion.)
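If you prefer not to trim the credential by hand, the following sketch extracts the `username:password` part programmatically. It assumes the dashboard shows the credential as a full connection URL of the form `scheme://USER:PASS@HOST:PORT`; that format, the helper name `extract_browser_auth`, and the example URL are assumptions for illustration.

```python
from urllib.parse import urlsplit


def extract_browser_auth(connection_url):
    """Return the 'username:password' part of a connection URL."""
    parts = urlsplit(connection_url)
    if parts.username is None or parts.password is None:
        raise ValueError("no username:password found in the URL")
    return f"{parts.username}:{parts.password}"


# Made-up credential in the assumed format, not a real account
example_url = "wss://brd-customer-EXAMPLE-zone-my_zone:s3cretpass@host.example:9222"
browser_auth = extract_browser_auth(example_url)
print(browser_auth)
```

The resulting string is what goes into the 'BROWSER_AUTH' environment variable.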

Finally, you will need an API token, which you will find in the 'API Keys' section of 'Account Settings'.

More information about the MCP server configuration is available in the Bright Data Docs.

Mistral AI

To use Mistral AI chat models, you will need an API key, which you can generate in the 'API Keys' section of the Admin panel.

If you want to explore more about MistralAI, check out one of my other articles: https://developer-service.blog/creating-a-natural-language-terminal-with-python-and-mistralais-codestral-mamba-7b/

Node.js & MCP Server Package

Ensure node and npx are installed.

The MCP server runs via the @brightdata/mcp npm package, which you invoke through npx (GitHub).

Python Environment

Python 3.10+ is required for this project, since the mcp client package used below does not support older versions.

Additionally, the following packages are required: langchain_mistralai, langchain_mcp_adapters, langgraph, and python-dotenv.

These will be installed by uv as described in the next section.

Code Walkthrough

All code is available at the GitHub repository mcp-scrape-web-article.

Let's install the requirements with:

uv pip install -r requirements.txt

Then create a .env file:

MISTRALAI_API_KEY=your_mistralai_key
API_TOKEN=your_brightdata_token
BROWSER_AUTH=your_browser_api_key
WEB_UNLOCKER_ZONE=your_zone_name

Replace the values with the values explained in the previous section.
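A missing or empty variable tends to surface later as a confusing authentication error, so it can help to check the configuration up front. The sketch below is one way to do that. The helper `missing_vars` and the `REQUIRED_VARS` tuple are hypothetical names introduced here; the variable names themselves come from the .env file above.

```python
import os

# Variable names taken from the .env file described above
REQUIRED_VARS = ("MISTRALAI_API_KEY", "API_TOKEN", "BROWSER_AUTH", "WEB_UNLOCKER_ZONE")


def missing_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment
sample_env = {"MISTRALAI_API_KEY": "x", "API_TOKEN": "y"}
print(missing_vars(sample_env))
```

In the real script you would call `missing_vars()` with no arguments after `load_dotenv()` and stop early if the returned list is not empty.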

Imports and Environment Loading

# Imports
import asyncio
import os

from dotenv import load_dotenv
from langchain_mcp_adapters.tools import load_mcp_tools
from langchain_mistralai import ChatMistralAI
from langgraph.prebuilt import create_react_agent
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Load environment variables
load_dotenv()

We begin by loading environment variables via python-dotenv, keeping secrets out of source control.

Model Initialization

# Initialize the model

model = ChatMistralAI(model="mistral-large-latest", api_key=os.getenv("MISTRALAI_API_KEY"))

We instantiate ChatMistralAI with mistral-large-latest, the alias for Mistral AI's current large model, which supports streaming and the tool calls the agent relies on.

MCP Server Parameters

# Initialize the server parameters
server_params = StdioServerParameters(
    # Full path on Windows; on other operating systems, "npx" is enough
    command="C:\\Program Files\\nodejs\\npx.cmd",
    # Credentials the Bright Data MCP server reads from its environment
    env={
        "API_TOKEN": os.getenv("API_TOKEN"),
        "BROWSER_AUTH": os.getenv("BROWSER_AUTH"),
        "WEB_UNLOCKER_ZONE": os.getenv("WEB_UNLOCKER_ZONE"),
    },
    # Package that npx downloads and runs
    args=["@brightdata/mcp"],
)

StdioServerParameters bundles the command (npx), its arguments (@brightdata/mcp), and the environment variables needed to launch the MCP server locally over the STDIO transport.
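The hard-coded Windows path in the snippet above only works if Node.js is installed in that exact location. As a more portable alternative, the sketch below looks up npx on the PATH using the standard library. The helper name `resolve_npx` is hypothetical, and this is a suggestion rather than what the original code does.

```python
import shutil


def resolve_npx(default="npx"):
    """Return the full path to npx if it is on PATH, else the bare name."""
    # On Windows, shutil.which also tries the extensions listed in PATHEXT,
    # so it can find npx.cmd without the extension being spelled out.
    return shutil.which("npx") or default


npx_command = resolve_npx()
print(npx_command)
```

You could then pass `npx_command` as the `command` argument instead of the fixed path.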

Asynchronous Chat Function
