In this article, we are embarking on an exciting project: building a ChatGPT-like application using Chainlit and MistralAI.

Creating a ChatGPT-like application is not just about replicating an existing model but also about exploring the realms of AI and natural language processing. This project provides an opportunity to delve into the fascinating world of conversational AI, understand the complexities of language models, and apply this knowledge in a practical, hands-on manner.

Whether you're a developer, an AI enthusiast, or someone curious about the potential of AI in communication, this project offers valuable insights and skills.

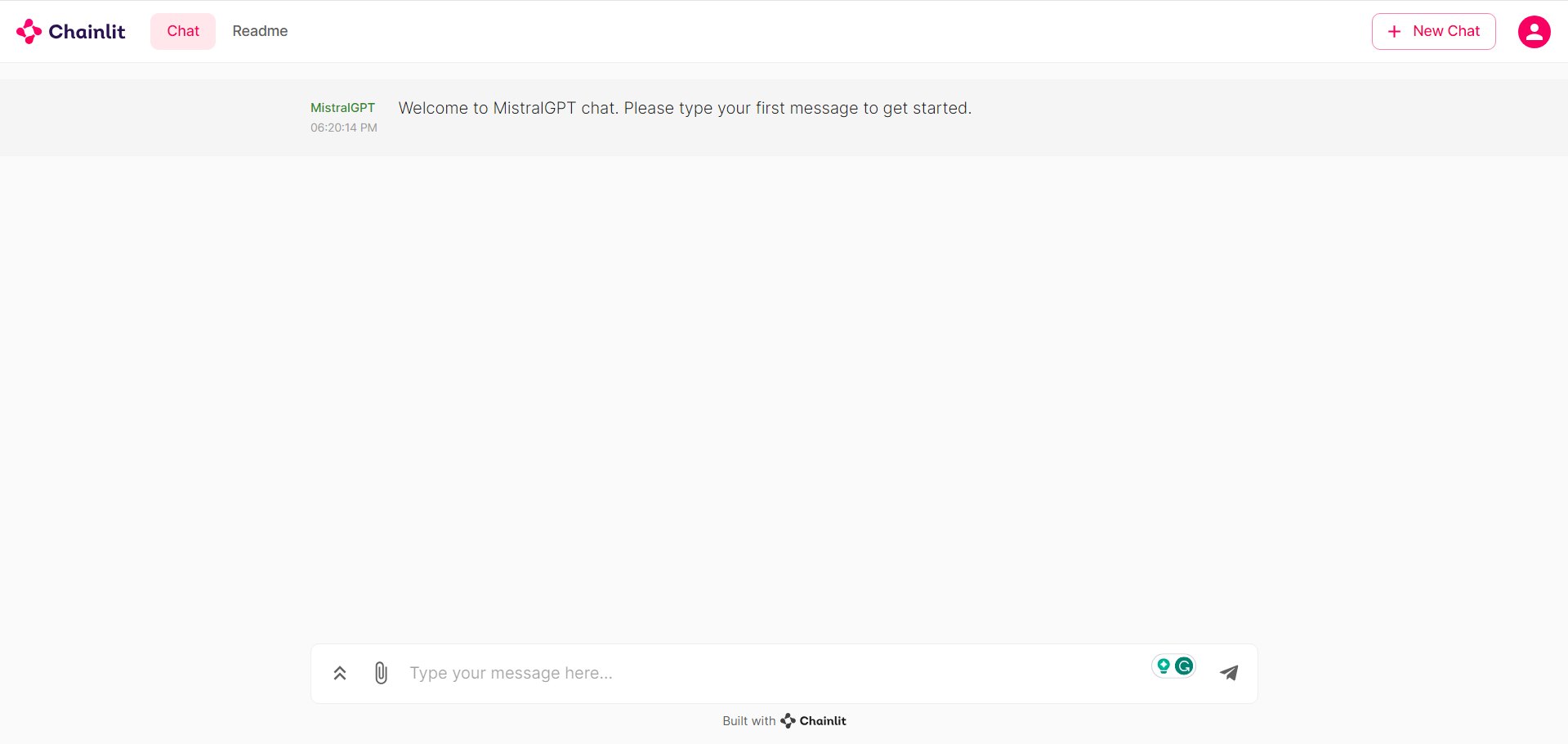

Check out the finished application in action:

Chainlit application running with MistralAI integration

You can also check out this article in video format:

Prerequisites

Developing a ChatGPT-like application using Chainlit and MistralAI requires certain technical knowledge to ensure the successful implementation of the project.

Here are the key areas of expertise needed:

- Python Programming

- Fundamentals of AI and Machine Learning

- API Integration

- Access to a MistralAI API key (more info below)

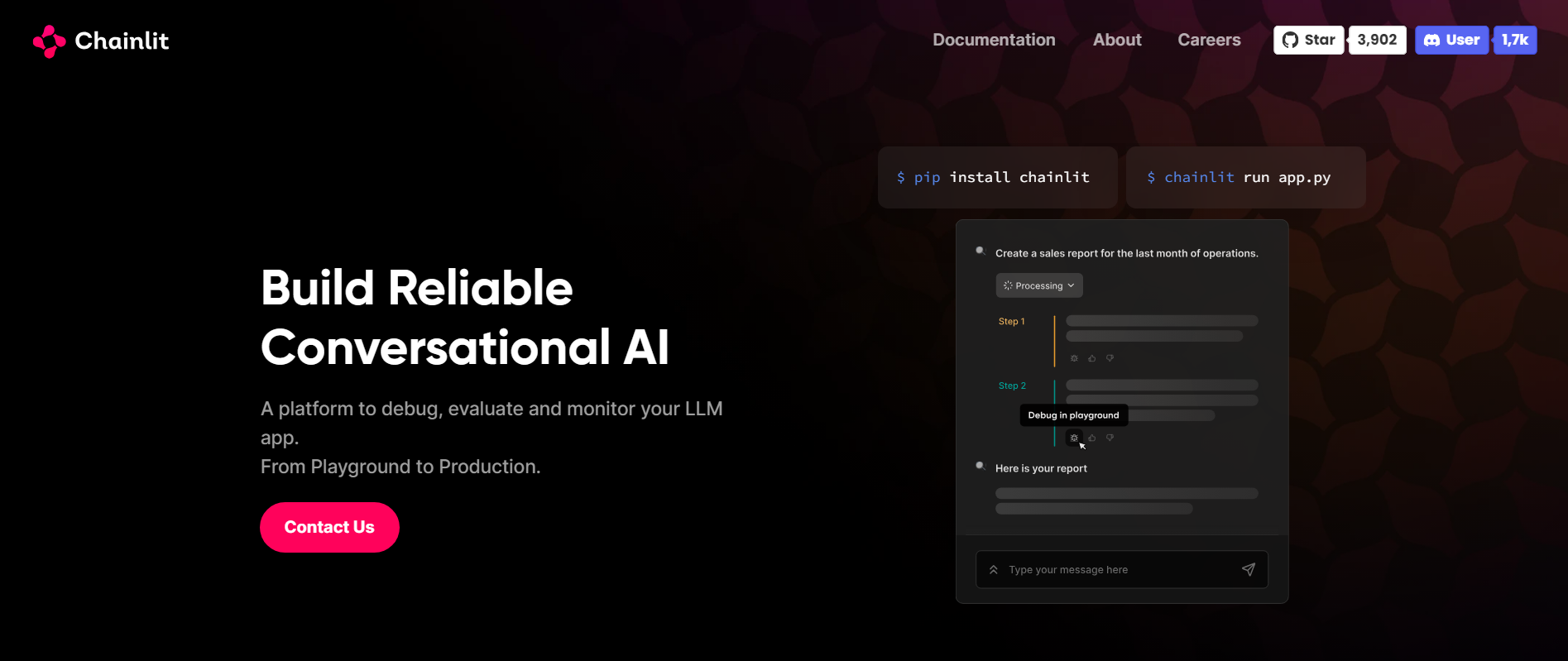

What is Chainlit?

Chainlit is an open-source Python package designed to facilitate the rapid development of chat applications using your own business logic and data. It's specifically tailored for building applications with functionality similar to ChatGPT, and it can be integrated into an existing code base or used to start from scratch. Its key features include fast development, data persistence, visualization of multi-step reasoning, and tools for iterating on prompts.

The platform is compatible with all Python programs and libraries and comes with integrations for popular libraries and frameworks. This flexibility makes it ideal for a wide range of AI and machine learning projects, particularly those involving conversational AI. Chainlit also includes a ChatGPT-like frontend that can be used out of the box, but users have the option to build their own frontend and use Chainlit as a backend.

For more detailed information and guidance on using Chainlit, you can refer to their official documentation.

What is MistralAI?

Mistral AI is a prominent player in the field of artificial intelligence, known particularly for its advanced language models. The company's flagship open-weight model, Mixtral 8x7B, is a state-of-the-art language model with strong performance across a wide range of practical applications. It is notable for its deep understanding of language and its ability to generate human-like text, making it well suited for applications such as content creation and chatbots.

Key features of Mistral AI's language models, such as Mistral 7B and Mixtral 8x7B, include:

- Language Understanding: The models can process complex text inputs and understand language at a sophisticated level.

- Text Generation: They excel at generating text that closely resembles human writing, which is essential for applications like content creation and chatbots.

Mistral AI's approach is characterized by its commitment to open models and community-driven development. It releases many of its models and deployment tools under permissive licenses, fostering a culture of open science and free software. This gives users transparent access to the model weights, enabling full customization without requiring them to share their data.

Mixtral 8x7B, in particular, is distinguished by its efficiency and adaptability to various use cases. Mistral AI reports that it offers 6x faster inference than Llama 2 70B while matching or outperforming it on most benchmarks.

Mistral AI has also launched its first API endpoints, available in early access. These endpoints offer different performance and price trade-offs and cover both generative models and an embedding model.

The generative endpoints provided by Mistral AI include:

- Tiny Endpoint (mistral-tiny): The most cost-effective option, suitable for large batch-processing tasks where cost is a significant factor. It's powered by Mistral-7B-v0.2 and is currently limited to English.

- Small Endpoint (mistral-small): Offering stronger reasoning capabilities, this endpoint supports English, French, German, Italian, and Spanish. It's powered by Mixtral-8X7B-v0.1, a sparse mixture-of-experts model with 12B active parameters.

- Medium Endpoint (mistral-medium): Currently based on an internal prototype model, this is Mistral AI's highest-quality endpoint.
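Because the three generative endpoints differ mainly in cost, quality, and language support, choosing one can be expressed as a small lookup. The helper below is a hypothetical sketch: `pick_endpoint` and its tables are illustrative and not part of the mistralai SDK. It encodes only the differences listed above, plus one labeled assumption: the language list for mistral-medium is not stated here, so it is assumed to match mistral-small.

```python
# Languages each generative endpoint supports, as described above.
# ASSUMPTION: mistral-medium's languages are not listed, so we assume
# they match mistral-small's.
ENDPOINT_LANGUAGES = {
    "mistral-tiny": {"en"},
    "mistral-small": {"en", "fr", "de", "it", "es"},
    "mistral-medium": {"en", "fr", "de", "it", "es"},
}

# Ordered from most cost-effective to highest quality, as listed above.
ENDPOINTS_IN_ORDER = ["mistral-tiny", "mistral-small", "mistral-medium"]


def pick_endpoint(language: str, best_quality: bool = False) -> str:
    """Hypothetical helper: return the most cost-effective endpoint that
    supports `language`, or the highest-quality one if `best_quality`."""
    candidates = [
        name for name in ENDPOINTS_IN_ORDER
        if language in ENDPOINT_LANGUAGES[name]
    ]
    if not candidates:
        raise ValueError(f"No endpoint listed for language {language!r}")
    return candidates[-1] if best_quality else candidates[0]


print(pick_endpoint("en"))                     # mistral-tiny
print(pick_endpoint("fr"))                     # mistral-small
print(pick_endpoint("en", best_quality=True))  # mistral-medium
```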

Additionally, Mistral AI provides an Embedding Model (mistral-embed), which outputs vectors in 1024 dimensions and is designed with retrieval capabilities in mind.
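Retrieval with embedding vectors usually means ranking stored vectors by cosine similarity to a query vector. The stdlib-only sketch below illustrates that ranking step; the tiny 3-dimensional vectors are made-up stand-ins for real 1024-dimensional mistral-embed outputs, and the function names are illustrative.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine_similarity(query, docs[d]), reverse=True)
    return ranked[:k]


# Toy vectors standing in for real 1024-dimensional embeddings.
docs = {
    "pricing": [0.9, 0.1, 0.0],
    "languages": [0.1, 0.9, 0.1],
    "setup": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]
print(top_k(query, docs, k=2))  # ['pricing', 'languages']
```

In a real application, both the documents and the query would be embedded with mistral-embed before ranking.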

For this article, we will use the mistral-small and mistral-medium endpoints. You will need to register for access and obtain an API key from MistralAI.
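The mistralai package is a client for Mistral's REST API. To show roughly what a chat request to one of these endpoints contains, the stdlib-only sketch below builds (but does not send) such a request. The URL, Bearer-token header, and JSON fields reflect Mistral's public chat completions API as we understand it, so check the current API reference before relying on them; the `MISTRAL_API_KEY` variable name and the `build_chat_request` helper are our own assumptions.

```python
import json
import os
import urllib.request

# ASSUMPTION: Mistral's chat completions URL; verify against the API docs.
CHAT_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(model: str, user_message: str, api_key: str) -> urllib.request.Request:
    """Hypothetical helper: build (without sending) a chat completion request."""
    payload = {
        "model": model,  # e.g. "mistral-small" or "mistral-medium"
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("mistral-small", "Hello!", os.environ.get("MISTRAL_API_KEY", "dummy-key"))
print(req.get_method(), req.full_url)  # POST https://api.mistral.ai/v1/chat/completions

# Sending it requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

In the application itself, the mistralai client takes care of building and sending these requests.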

Building the Application

First, make sure Python is installed on your system. If it isn't, you can download the latest version from python.org.

Now we can create our application in five simple steps.

Step 1 - Create a project folder and a Python virtual environment

When building an application using Python, it's good practice to start by setting up a Python virtual environment. This environment keeps your project dependencies isolated and organized, ensuring that the project does not interfere with other Python projects on your system.

```bash
# Create and move to the new folder
mkdir MistralGPT
cd MistralGPT

# Create a virtual environment
python -m venv venv

# Activate the virtual environment (Windows Command Prompt)
.\venv\Scripts\activate.bat

# Activate the virtual environment (Linux/macOS)
source ./venv/bin/activate
```

Creating a Python virtual environment
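To confirm from inside Python that the virtual environment is active, you can compare `sys.prefix` with `sys.base_prefix`: in an environment created with `python -m venv`, `sys.prefix` points at the environment folder while `sys.base_prefix` still points at the base installation. A minimal sketch (the `in_virtualenv` name is illustrative):

```python
import sys


def in_virtualenv() -> bool:
    """Return True when running inside a venv created by `python -m venv`."""
    # venv sets sys.prefix to the environment directory, while
    # sys.base_prefix keeps pointing at the base Python installation.
    return sys.prefix != sys.base_prefix


print("Virtual environment active:", in_virtualenv())
print("Interpreter prefix:", sys.prefix)
```

Run it with the same `python` command you will use for the project; if it prints `False`, the activation step did not take effect in that shell.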

Step 2 - Install requirements

Next, you can install the necessary requirements:

```bash
pip install chainlit
pip install mistralai
pip install python-decouple  # for environment variables
```

Installing requirements
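python-decouple lets you keep the MistralAI API key out of your source code. The sketch below assumes the key is stored under the name `MISTRAL_API_KEY` (our choice of name) either as an environment variable or in a `.env` file in the project folder. `get_mistral_api_key` is a hypothetical helper, and the `os.environ` fallback is only there so the snippet also runs without python-decouple installed.

```python
# .env (in the project folder; keep it out of version control):
# MISTRAL_API_KEY=your-api-key-here
import os

try:
    # python-decouple's config() checks environment variables first,
    # then a .env (or settings.ini) file.
    from decouple import config
except ImportError:
    config = None  # fallback so this sketch runs without python-decouple


def get_mistral_api_key(name: str = "MISTRAL_API_KEY") -> str:
    """Hypothetical helper: return the API key or fail with a clear error."""
    if config is not None:
        key = config(name, default="")
    else:
        key = os.environ.get(name, "")
    if not key:
        raise RuntimeError(f"{name} is not set; add it to .env or the environment")
    return key
```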

Step 3 - Create the Chainlit application

You can now start writing the Chainlit application. Create a main.py file and start with this basic code:

```python
import chainlit as cl


@cl.on_chat_start
async def on_chat_start():
    # Runs once when a new chat session starts: send a welcome message.
    await cl.Message(
        content=f"Welcome to MistralGPT chat. Please type your first message to get started.",
        author="MistralGPT",
    ).send()
```

Creating the initial Chainlit application code

Here's a breakdown of its components and functionalities:

- The `@cl.on_chat_start` decorator is provided by Chainlit. Decorators in Python are used to modify or extend the behavior of functions or methods. In this case, `@cl.on_chat_start` indicates that the function defined immediately below it should be executed when a chat session starts.

- Inside the function, `await cl.Message(...).send()` sends a message to the user at the beginning of the chat. `cl.Message(...)` constructs a message object, and its `content` argument holds the text that welcomes the user to "MistralGPT chat" and prompts them to type their first message. It is written as an f-string, although it contains no placeholders, so a plain string would work just as well.

- The `author` argument is set to `"MistralGPT"`, which is the name shown as the sender of the message.
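To make the decorator idea concrete, here is a simplified, hypothetical model of how an event decorator like `@cl.on_chat_start` can work: the decorator stores the function in a registry, and the framework later awaits it when the event fires. This is not Chainlit's actual implementation; `on_event`, `HANDLERS`, and `fire` are made-up names, and only the standard library is used.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical registry mapping event names to async handler functions.
HANDLERS: dict[str, Callable[[], Awaitable[None]]] = {}


def on_event(name: str):
    """Return a decorator that registers an async function for `name`."""
    def decorator(func: Callable[[], Awaitable[None]]):
        HANDLERS[name] = func
        return func  # the function itself is returned unchanged
    return decorator


sent_messages: list[str] = []


@on_event("chat_start")
async def on_chat_start() -> None:
    # Stand-in for `await cl.Message(...).send()`.
    sent_messages.append("Welcome to MistralGPT chat.")


async def fire(name: str) -> None:
    """What a framework would do when the event happens."""
    handler = HANDLERS.get(name)
    if handler is not None:
        await handler()


asyncio.run(fire("chat_start"))
print(sent_messages)  # ['Welcome to MistralGPT chat.']
```

The key point is that decorating a function does not run it; it only registers it so the framework can call it at the right moment.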

You can start the application with the following command:

```bash
chainlit run main.py
```

It should automatically open a browser window (by default at http://localhost:8000) showing the chat interface with the welcome message.
